Wikidata, the first new project to emerge from the Wikimedia Foundation since 2006, is now beginning development. The organization, known best for its user-edited encyclopedia Wikipedia, recently announced the new project at February’s Semantic Tech & Business Conference in Berlin, describing Wikidata as a new effort to provide a database of knowledge that can be read and edited by humans and machines alike.

There have been other attempts at creating a semantic database built from Wikipedia’s data before – for example, DBpedia, a community effort to extract structured content from Wikipedia and make it available online. The difference is that, with Wikidata, the data won’t just be made available, it will also be made editable by anyone.

The project’s goal in developing a semantic, machine-readable database doesn’t just help push the web forward, it also helps Wikipedia itself. The data will bring all the localized versions of Wikipedia on par with each other in terms of the basic facts they house. Today, the English, German, French and Dutch versions offer the most coverage, with other languages falling much further behind.

Wikidata will also enable users to ask different types of questions, such as “Which of the world’s ten largest cities have a female mayor?” Queries like this are today answered by user-created Wikipedia Lists – that is, manually created structured answers. Wikidata, on the other hand, will be able to create these lists automatically.
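To make the idea concrete, here is a minimal Python sketch of how such a question could be answered once the facts are stored as structured records. Everything in it is an assumption for illustration: the `City` record, the field names and the placeholder cities and figures are hypothetical, and the project has not published a query interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class City:
    name: str
    population: int
    mayor_gender: str  # "female", "male" or "unknown"


# Placeholder records for illustration only, not real cities or figures.
CITIES = [
    City("City A", 9_000_000, "female"),
    City("City B", 12_000_000, "male"),
    City("City C", 7_500_000, "female"),
    City("City D", 3_000_000, "female"),
    City("City E", 15_000_000, "unknown"),
]


def largest_cities_with_female_mayor(cities, top_n=10):
    """Take the top_n cities by population, then keep those with a female mayor."""
    largest = sorted(cities, key=lambda c: c.population, reverse=True)[:top_n]
    return [c.name for c in largest if c.mayor_gender == "female"]


print(largest_cities_with_female_mayor(CITIES, top_n=3))
```

The point of the sketch is that the list is computed from the records rather than written by hand, so it changes as soon as a population figure or a mayor changes.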

The initial effort to create Wikidata is being led by the German chapter of Wikimedia, Wikimedia Deutschland, whose CEO Pavel Richter calls the project “ground-breaking,” and describes it as “the largest technical project ever undertaken by one of the 40 international Wikimedia chapters.” Much of the early experimentation which resulted in the Wikidata concept was done in Germany, which is why it’s serving as the base of operations for the new undertaking.

The German chapter will perform the initial development of Wikidata, then hand over operation and maintenance to the Wikimedia Foundation once that work is complete. The hand-off is expected to occur a year from now, in March 2013.

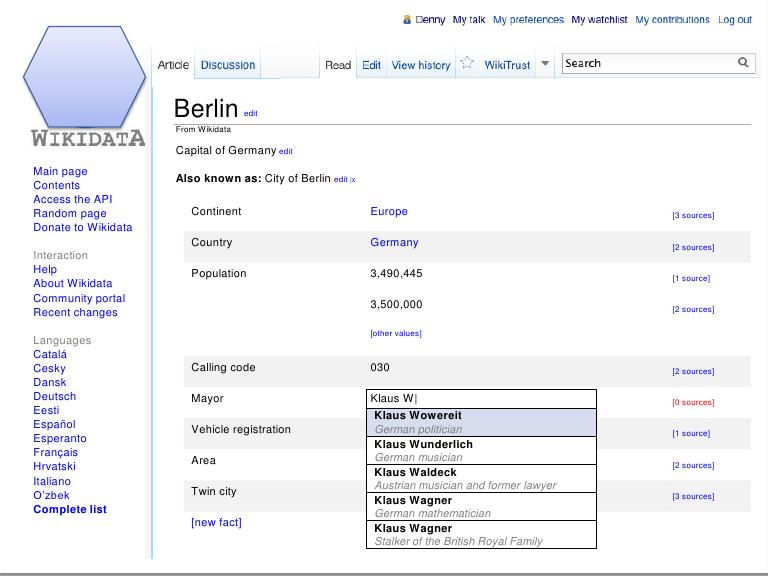

The overall project will have three phases, the first of which involves creating one Wikidata page for each Wikipedia entry across Wikipedia’s more than 280 supported languages. This will provide the online encyclopedia with one common source of structured data that can be used in all articles, no matter which language they’re in. For example, the date of someone’s birth would be recorded and maintained in one place: Wikidata. Phase one will also involve centralizing the links between the different language versions of Wikipedia. This part of the work will be finished by August 2012.
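A rough Python sketch of what phase one describes might look like the following: one shared record per topic, holding both the links to each language edition and the facts those editions have in common. The `Item` class, its field names and the sample entry are all hypothetical, since Wikidata’s actual data model has not been finalized.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    item_id: str
    # Language code -> article title in that language's Wikipedia.
    sitelinks: dict[str, str] = field(default_factory=dict)
    # Facts shared by every language edition.
    facts: dict[str, str] = field(default_factory=dict)


def find_item(items, lang, title):
    """Return the shared item behind an article in a given language, if any."""
    for item in items:
        if item.sitelinks.get(lang) == title:
            return item
    return None


def interlanguage_links(item, lang):
    """List the same topic's articles in every other language."""
    return sorted((l, t) for l, t in item.sitelinks.items() if l != lang)


# Placeholder entry for illustration only.
ITEMS = [
    Item(
        "item-1",
        sitelinks={"en": "Example Person", "de": "Beispielperson", "fr": "Personne exemple"},
        facts={"date_of_birth": "1900-01-01"},
    )
]

en = find_item(ITEMS, "en", "Example Person")
de = find_item(ITEMS, "de", "Beispielperson")
print(en is de)  # both articles resolve to the same shared record
print(interlanguage_links(en, "en"))
print(de.facts["date_of_birth"])
```

Because the English and German articles resolve to the same record, correcting the birth date once would correct it for every language that reads from it.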

In phase two, editors will be able to add and use data in Wikidata, and this will be available by December 2012. Finally, phase three will allow for the automatic creation of lists and charts based on the data in Wikidata, which can then populate the pages of Wikipedia.

In terms of how Wikidata will impact Wikipedia’s user interface, the plan is for the data to live in the “info boxes” that run down the right-hand side of a Wikipedia page (for example, those on the right side of NYC’s page). The data will be entered at data.wikipedia.org, which will then drive the info boxes wherever they appear, across languages, and in other pages that use the same info boxes. However, because the project is just now going into development, some of these details may change.
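As a sketch of how a central store could drive info boxes in several languages, consider the Python below. It assumes the values live in one place and that only the labels are localized. The store, the label table and the sample city are hypothetical stand-ins, not the project’s design.

```python
# Hypothetical central record: values are stored once, independent of language.
CENTRAL_DATA = {
    "example-city": {"population": 1_000_000, "founded": 1800},
}

# Hypothetical per-language labels for each property.
LABELS = {
    "en": {"population": "Population", "founded": "Founded"},
    "de": {"population": "Einwohner", "founded": "Gegründet"},
}


def render_infobox(item_id, lang):
    """Build info box rows from the shared values and the language's labels."""
    facts = CENTRAL_DATA[item_id]
    labels = LABELS[lang]
    return [f"{labels[prop]}: {value}" for prop, value in facts.items()]


for lang in ("en", "de"):
    print(lang, render_infobox("example-city", lang))
```

Under these assumptions, editing the population once in the central record would change the figure in both the English and the German box the next time they are rendered.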

Below, an early concept for Wikidata:

All the data contained in Wikidata will be published under a free Creative Commons license, which opens it up for use by any number of external applications, including e-government, the sciences and more.

Dr. Denny Vrandečić, who joined Wikimedia from the Karlsruhe Institute of Technology, is leading a team of eight developers to build Wikidata, and is joined by Dr. Markus Krötzsch of the University of Oxford. Krötzsch and Vrandečić, notably, were both co-founders of the Semantic MediaWiki project, which has pursued goals similar to those of Wikidata over the past few years.

The initial development of Wikidata is being funded through a donation of 1.3 million Euros, half of which comes from the Allen Institute for Artificial Intelligence, an organization established by Microsoft co-founder Paul Allen in 2010. The goal of the Institute is to support long-range research activities that have the potential to accelerate progress in artificial intelligence, which includes web semantics.

“Wikidata will build on semantic technology that we have long supported, will accelerate the pace of scientific discovery, and will create an extraordinary new data resource for the world,” says Dr. Mark Greaves, VP of the Allen Institute.

Another quarter of the funding comes from the Gordon and Betty Moore Foundation, through its Science program, and another quarter comes from Google. According to Google’s Director of Open Source, Chris DiBona, Google hopes that Wikidata will make large amounts of structured data available to “all.” (All, meaning, of course, to Google itself, too.)

This ties back to all those vague reports of “major changes” coming to Google’s search engine in the coming months, seemingly published far ahead of any actual news (like this), possibly in a bit of a PR push to take the focus off the growing criticism surrounding Google+…or possibly to simply tease the news by educating the public about what the “semantic web” is.

Google, which stated it would be increasing its efforts at providing direct answers to common queries – like those with a specific, factual piece of data – could obviously build greatly on top of something like Wikidata. As it moves further into semantic search, it could provide details about the people, places and things its users search for. It would actually know what things are, whether birth dates, locations, distances, sizes, temperatures, etc., and also how they’re connected to other points of data. Google previously said it expects semantic search changes to impact 10% to 20% of queries. (Google declined to provide any on-the-record comment regarding its future plans in this area.)

Ironically, the results of Wikidata’s efforts may then actually mean fewer Google referrals to Wikipedia pages. Short answers could be provided by Google itself, positioned at the top of the search results. The need to click through to read full Wikipedia articles (or any articles, for that matter) would be reduced, leading Google users to spend more time on Google.